Summary: This article describes two blended learning (technology-rich) professional development workshops on course design for active learning to enable faculty development at a research university in the Southeastern United States. Specifically, the workshops were designed to address gaps in the international academic development literature, and so this article highlights one way to address related requirements, such as the need for systematic evaluation, provision of thick descriptions of academic development practices, and evaluation of the effect of different learning environments (physical classroom spaces) on teaching and learning outcomes. Hence, the workshops model evidence-based approaches for designing faculty development, including the systematic alignment of the workshops’ goals with qualitative and quantitative evaluations of the workshops’ effectiveness.

Keywords: Blended/Active Learning; Faculty Development; Systematic Evaluation; Active Learning Classrooms

Summary (Samuel Olugbenga King [USA]: Clear course design for active learning: the evolution of a “blended learning” method for faculty development): This article describes two (technology-rich) practical workshops for professional development. They concern course design for active learning, which enables the professional development of faculty at a research university in the Southeastern United States. In particular, the workshops were developed to close gaps in the international academic literature. The article thereby shows ways of meeting technical requirements, for example the need for systematic evaluation, the preparation of extensive descriptions of academic development practices, and the evaluation of the influence of different learning environments (classrooms) on learning outcomes. The workshops therefore model clear methods for faculty development, including the systematic pairing of the workshops’ goals with qualitative and quantitative evaluations of the workshops’ effectiveness.

Keywords: Blended/active learning; faculty development; systematic evaluation; active learning classrooms

Summary (Evidence-based course design for active learning: evaluation of a blended learning approach to enable faculty development): This article describes two blended learning (technology-rich) workshops for professional development. They concern course design for active learning, which enables the development of faculty at a research university in the Southeastern United States. In particular, the workshops were developed to respond to gaps in the international academic literature. The article thus shows ways of meeting technical requirements, such as the need for systematic evaluation, the provision of extensive descriptions of academic development practices, and the assessment of the effects of different learning environments (physical classrooms) on teaching and learning outcomes. The workshops therefore model evidence-based approaches to designing faculty development, including the systematic alignment of the workshops’ goals with qualitative and quantitative evaluations of workshop effectiveness.

Keywords: Blended/active learning; faculty development; systematic evaluation; active learning classrooms

Introduction

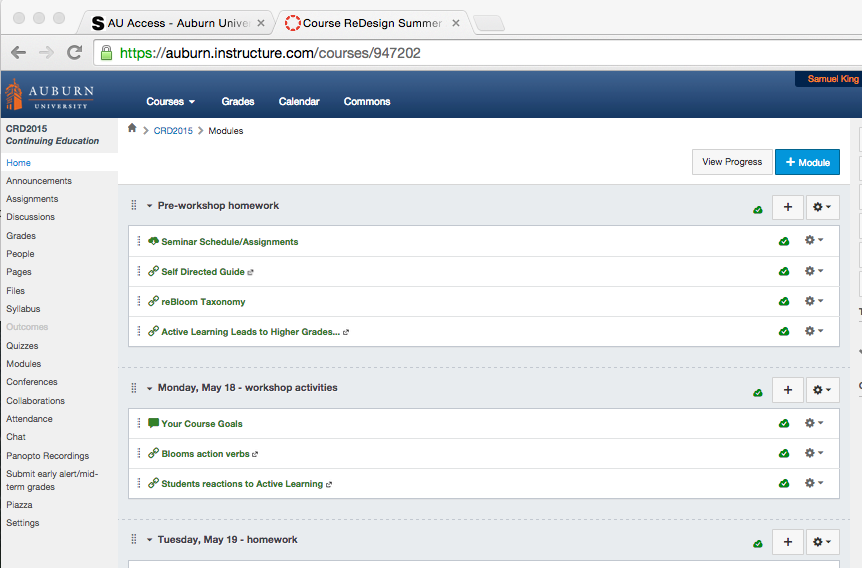

This article describes two professional development workshops on active learning for faculty at a large research university in the Southeastern United States. In particular, it highlights the evidence-based approach adopted for the design, implementation, and evaluation of the workshops, which were predicated on blended learning, including the use of Canvas LMS-focused activities (Figure 1), the integration of technology into workshop activities, and the use of two technology-rich classrooms as the workshops’ implementation sites.

Figure 1: A cross-section of the home page for the Canvas LMS-hosted course design for active learning faculty development workshops.

Defining Active Learning

In this article, active learning is conceptualized as student-centered instruction (e.g., U.S. Department of Education, 2010; Anderson et al., 2011). Hence, only educational technologies, including active learning classrooms (e.g., Valenti, 2015), that support and promote student-centered pedagogies are considered components of the active learning instructional ecosystem. Further, blended learning (or flipped classes) is construed as representing instructional pedagogies that confer two advantages over traditional instruction. First, they promote student-centered instruction, and second, they provide more differentiated teaching and learning opportunities (e.g., Jaschik & Lederman, 2014, p. 8; see also Fishman et al., 2013; Freeman et al., 2014; Drake et al., 2014).

The next section describes trends in active learning in higher education in the United States. Then, based on the literature review, the importance of and necessity for evidence-based approaches to designing faculty or professional development activities, including technology-rich workshops, in higher education will be presented.

Literature Review

The efficacy of active learning, including technology-rich pedagogies, is no longer in doubt (Froyd, 2007; Means et al., 2010; Ciaccia, Tsang & Handelsman, 2011; Fishman et al., 2013; Lawless & Tweeddale, 2015). For example, a meta-analysis of 225 empirical studies, the largest to date, clearly establishes that student achievement is higher and failure rates are lower when students learn under active learning conditions in contrast to “traditional lecturing” (Freeman et al., 2014). Other studies have established that active learning is beneficial for lower-performing students (Weltman & Whiteside, 2010), and for the attainment of conceptual understanding of subject matter (Prince, 2004), among other benefits.

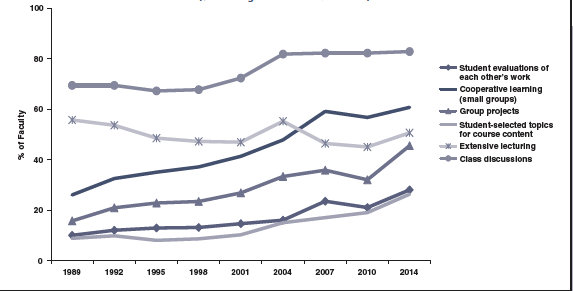

This recognition of the advantages of active learning is tacitly mirrored in the growing uptake of such approaches in undergraduate education. For example, a recent survey of “16,112 full time undergraduate teaching faculty members at 269 four-year colleges and universities” (Eagan et al., 2014) indicates that only about half (50.6%) of all faculty still prefer a “heavy reliance on lecture” (p. 6) as the main mode of undergraduate instruction, while about four out of five courses now feature “class discussions” (Figure 2).

Figure 2: “Changes in faculty teaching practices, 1989 to 2014.” The y-axis refers to the “% of Faculty” marking “All” or “Most” courses on the survey (Eagan et al., 2014, p. 6).

Similarly, a recent large-scale survey of faculty and campus administrators (Jaschik & Lederman, 2014) indicates that 78% of faculty use learning management systems to incorporate some form of blended learning into their courses (p. 8). In addition, “half (51 percent) of faculty believe improving the educational experience for students by introducing more active learning in the course is a very important reason for converting face-to-face courses to blended or hybrid courses [while] about one in three professors have taught an online course” (p. 8). Moreover, active learning classrooms or ALCs (Figure 4), which prioritize student-centered teaching and learning, are being used in more than 40 institutions across North America (McGill University, 2015). Hence, the data clearly show a convergence towards the use of active learning approaches, perhaps also as a response to the clarion calls for more student-centered instruction by national institutions in the United States (e.g., U.S. Department of Education, 2010; Anderson et al., 2011).

Subsequently, many institutions in the global North and South (Australia), especially through their Centers for Teaching and Learning, now offer professional development for course design to enhance faculty capacity to use active learning-based instructional approaches. However, there is a need for “faculty training in teaching that is predicated on evidence-based approaches” (Anderson et al., 2011, p. 152) to designing active learning professional development activities (workshops, seminars, etc.), especially at research universities. First, Kane, Sandretto, and Heath (2002) claimed that “many academics have had little or no formal teacher education to prepare them for the teaching role” (p. 181). Second, Curwood (2014) echoes this training deficiency with respect to the use of technology, asserting that the professional development available to teachers remains “woefully inadequate” (p. 10). This assertion is supported by Palsole and Brunk-Chavez (2012), who stated that “the reality is that many college faculty are not prepared to teach in this blended environment [so as to] replace the traditional sage-on-stage approach with more student-centered pedagogy” (p. 18).

Third, recent research studies have likewise highlighted the lack of sufficient empirical evidence for the efficacy of ALCs, including the need for comparison of different learning environments, and also for aligned professional development to maximize their use (Valenti, 2015; Drake et al., 2014; Whiteside, Brooks & Walker, 2010; Whiteside & Fitzgerald, 2008). Fourth, empirical and conceptual reviews of the educational development literature clearly indicate that professional development practices are often not well articulated to facilitate (re)enactment or replication. For example, Amundsen and Wilson (2012) reported that they “did not find much evidence of practice systematically building on other published reports of practice” (p. 113) and subsequently highlighted the need for professional development research articles that include “sufficient detail of the practice and providing a model for what to include so that others will be able to build on it” (p. 113).

Last, another acute deficiency is the paucity of systematic evaluations of professional development activities that go beyond “satisfaction ratings” (Amundsen & Wilson, 2012, p. 95) or “the commonly used ‘happy sheet’ form of workshop feedback” (Bamber, 2008, p. 108). For example, Amundsen and Wilson (2012) advocate evaluation measures that include articulation of the “core characteristics of the design” together with “a detailed account of procedures or activities that had been put in place to determine effectiveness” (p. 95).

Therefore, this article is essentially a case study of how two workshops on active learning at a large research university in the Southeastern United States were designed, implemented and evaluated based on research evidence on how to design effective professional development to support faculty learning. Specifically, the study will highlight how the blended learning approach of the workshops was designed and implemented to support efficacious technology integration in instruction, and briefly describe a comparison of two different learning environments, including an ALC. Moreover, the short-term (mixed-question survey) and long-term evaluation measures that have been adopted to measure effectiveness will also be described. Last, this case study will include sufficient details about the workshops’ goals, design, implementation, and evaluation to facilitate (re)enactment or replication.

Workshops’ Goals (Anticipated Workshop Outcomes) and Faculty Recruitment

Two active learning course (re)design (CRD) workshops for faculty at a large research university in the Southeastern United States were held by the institution’s Center for Teaching and Learning (CTL) over the summer of 2015. One workshop (3-Week CRD) occurred over three weeks and consisted of three full days of professional development for faculty per week. The other workshop (1-Week CRD) consisted of three full days of professional development for faculty over one week.

Faculty Recruitment

Faculty for both the 3-Week CRD and 1-Week CRD were selected through an application process. The process for the former was competitive: 15 faculty were selected from a pool of 30 applications after a month-long period of university advertising. The successful 3-Week CRD applicants had the additional incentive of receiving a grant of $5,000, to be paid in two installments. The grant incentive also served as an evaluation measure, as will be explained later in the Evaluation section (Section 10). In contrast, the 11 faculty who were selected for the 1-Week CRD only had to complete an application.

Workshops’ Goals (Anticipated Workshop Outcomes)

The three integrated goals for the two workshops, which subsequently informed the design, implementation and post-implementation evaluation processes, are as follows (Boyd, 2015):

- Design a new or re-design an existing course using learner-centered design principles, basically the Backward Design model for Significant Learning Course Design (Fink, 2005; see also Fink, 2003). The evaluation of the achievement of this goal was based on the ability of the participating faculty to (re)design their courses using the Backward Design model (see Section 7);

- Be prepared to teach and flourish in Auburn University’s new and burgeoning (technology-rich/blended learning) environments as identified in the Auburn University Strategic Plan. The evaluation of the achievement of this goal was based on the faculty’s quantitative ratings of the active learning approaches modeled during the workshops, as well as a quantitative comparison of the two different learning environments that were used for the two workshops (see Sections 9 and 10);

- Become a part of an ongoing cohort committed to “making teaching visible” by sharing teaching innovations, using research to shape scholarly teaching endeavors, documenting success (in presentation and publication), and/or inviting colleagues into their classes. The evaluation of the achievement of this goal is presented in Section 8.

Hence, the short-term goals for the workshops were to enable and evaluate the participating faculty’s capacity to (re)design their courses using the Backward Design model. The workshops’ immediate goals also included an evaluation of faculty plans to evaluate or track the impact of the newly designed courses on their student learning outcomes. Meanwhile, the long-term goals include longitudinal tracking of faculty course design implementation plans, and evaluations of the possible effects of learning environments on instructional efficacy.

Workshops’ Integrated Design: Backward Design Model

The three integrated workshop goals outlined earlier informed the process through which faculty applied to participate in the workshops, the selection of workshop (faculty) participants, the course design model employed during the training, the actual implementation, and the evaluation measures. The theoretical framework for the two faculty development workshops for active learning is described below.

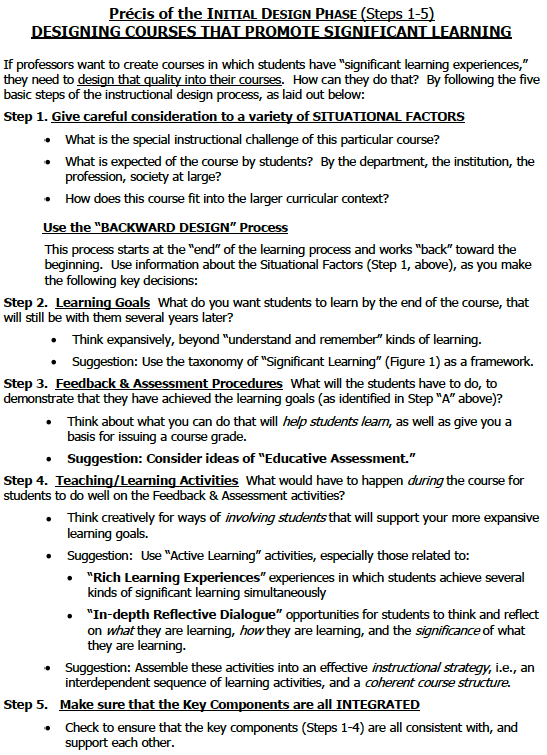

The two workshops were designed to implement the Backward Design model for Significant Learning Course Design (Fink, 2005). Although there are other approaches to designing courses, e.g., Competency-Based Education (Krause, Dias & Schedler, 2015) or the Accreditation Board for Engineering and Technology’s (ABET’s) Outcomes-Based Assessment (Mead & Bennett, 2009), the Backward Design model was adopted because it is based on reliable research, has been widely used in higher education, and is trans-disciplinary. The main components of the Backward Design model (Figure 3), which were adopted as the anticipated learning outcomes for the workshop participants, are (Fink, 2005, p. 5):

- Identification of the situational factors that can impact the success of course (re)design;

- Creation of student learning goals for the course;

- Creation of educative assessment and feedback that are aligned with the student learning goals; together with

- Creation of aligned learning activities; and finally

- A rigorous check to ensure that all four components – situational factors, learning goals, educative assessment and feedback, and learning activities – are integrated and reinforce each other to support significant teaching and learning outcomes.

It is important to highlight at this juncture that, with respect to design, the main differences between the two workshops are duration (one and three weeks); the classrooms or sites in which the workshops occurred (an ALC for the 3-Week CRD, and a scaled-down or marginal ALC for the 1-Week CRD); and faculty incentive for workshop participation (the 1-Week CRD faculty participants did not receive any monetary grants or incentives).

Figure 3: An overview of the Backward Design model for designing active learning courses (data from Fink, 2005, p. 5).

Workshops’ Implementations

Structure

Both the 3-Week CRD and 1-Week CRD were implemented similarly. Both workshops were structured based on the Backward Design model. Therefore, faculty participants went through a structured sequence of workshop activities to help them identify situational factors for their courses, create learning goals, and then create relevant assessments paired with the provision of educative feedback. The summer schedule for the two workshops (1 and 3 weeks) was designed to maximize in-workshop activities, as corroborated by Palsole and Brunk-Chavez (2012), who “determined that offering Digital Academies for a week while classes were not in session would allow participants to focus on course redesign instead of being distracted by their current teaching and grading responsibilities” (pp. 21-22).

Blended Learning

All 15 faculty for the 3-Week CRD and 11 faculty for the 1-Week CRD were enrolled as students on the Canvas LMS (Figure 1), on two web-based modules that had been specifically created for the workshops. To model flipped learning, for example, the research paper/Guide (Fink, 2005) for the Backward Design model was uploaded to Canvas, and participants were required to read the Guide prior to the first day of the two workshops. One of the initial learning activities on the first day of the workshops was a team-based quiz, using the Immediate Feedback Assessment Technique (http://www.epsteineducation.com/home/; Epstein et al., 2002), that assessed participants’ conceptual understanding of the components of the Backward Design model. The quiz was followed by classwide discussions.

Modeling Active Learning

Apart from the use of the flipped or blended learning model, quizzes, and classwide discussions, a number of other active learning approaches were modeled during the workshops. These included streaming participants into teams and blended learning tracks, the use of videos (e.g., Carol Dweck’s Growth Mindset Theory, in: https://www.youtube.com/watch?v=J-swZaKN2Ic), gallery walks, writing on glassboards and whiteboards, completion of Backward Design model worksheets, online Canvas discussions, use of concept maps (visual/graphic syllabus), microteaching with video recording, webinars, real-time sharing of workshop artifacts and work on Apple TV, real-time uploading of course process artifacts onto Canvas for collaborative sharing, and homework assignments, which were submitted via Canvas.

Learning Environments

The online learning environment for the two workshops was Canvas. Meanwhile, the face-to-face workshop activities occurred in an ALC for the 3-Week CRD, and a marginal ALC for the 1-Week CRD – marginal because, although the venue had two Apple TVs for collaborative sharing via mobile devices, there were no round tables and chairs to facilitate mobility and team formation, and the classroom space was also more limited.

Figure 4: An active learning classroom (ALC) at Auburn University: Workshop participants (Faculty at Auburn University) working collaboratively at a glass board in the HCC 2213 ALC.

On the last days of the two workshops, evaluation surveys were administered to the participating faculty to gauge the effectiveness of the workshops. The evaluation protocol and the survey data will be discussed in the next section.

Workshops’ Effectiveness Evaluation Survey Design

Faculty for both workshops completed identical evaluation surveys. Because similar data collection and analysis protocols were used for both workshops, and because the data collected were similar, only the survey administration protocol for the 3-Week Workshop is described in this section. The 1-Week Workshop data will be employed later, when the effect of learning space is briefly investigated through a comparison of the two learning spaces or venues used for the workshops.

Survey Design and Data Collection

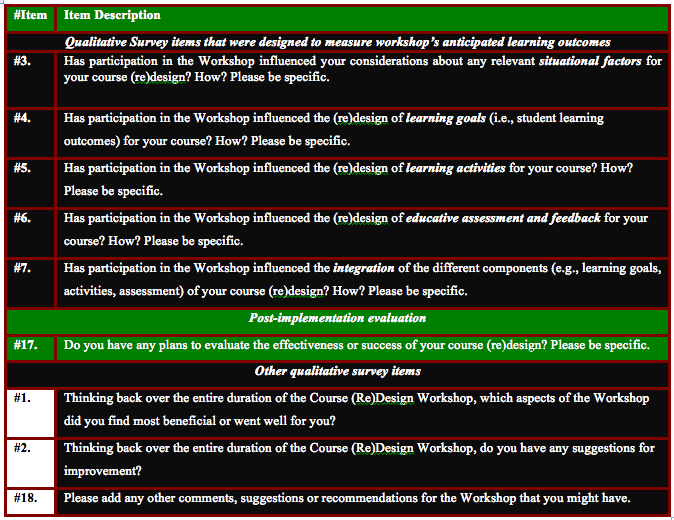

The web-based survey was created and administered through Qualtrics Surveys, and faculty participants completed it online during the last day of the 3-Week Workshop. The survey consisted of two sections, one qualitative and one quantitative. The qualitative section was designed to evaluate participants’ progress with respect to the workshop’s anticipated learning outcomes (Section 3.2), and consisted mainly of five question items (Table 1). In addition, there were four other qualitative survey items (Table 1).

Similarly, 16 quantitative survey items were adopted and designed to provide an objective measure of the perceived value of some of the active learning activities that were used during the workshops. For example, faculty were asked to rate the value of working in teams during the workshops:

#21. On a scale from 0-10, please rate the value of working in teams (i.e., working with colleagues at your regular table) to your professional development.

The results of this scaled measurement are presented in Sections 9 and 10.

Data Analysis

Thematic analysis was adopted for the data from the qualitative survey items. This was facilitated through the intentional alignment of the anticipated learning outcomes (Section 3.2) on the Backward Design process with survey items #3 through #7 (Table 1). It was therefore possible to evaluate, for example, the extent to which the workshop helped faculty identify or integrate learning goals in their course (re)design (Section 7.2). Further, sub-themes were identified from faculty responses to each of the five Backward Design survey items, as will be presented in Section 7.
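As a rough illustration of how the alignment between survey items #3 through #7 and the Backward Design outcomes can organize coded responses, the following Python sketch groups analyst-assigned sub-theme labels by outcome. The item-to-outcome mapping follows the survey items quoted in this article; the coded responses, the sub-theme labels, and the function name `tally_subthemes` are hypothetical and serve only as an example. This is not the procedure used in the study, which relied on qualitative interpretation rather than counting.

```python
from collections import Counter, defaultdict

# Alignment of survey items #3-#7 with the Backward Design outcomes,
# taken from the item wording reported in the article.
ITEM_TO_OUTCOME = {
    3: "Situational factors",
    4: "Student learning goals",
    5: "Learning activities",
    6: "Educative assessment and feedback",
    7: "Integration",
}

# Hypothetical coded data: (survey item number, sub-theme label assigned
# by the analyst). These pairs are invented for illustration.
coded_responses = [
    (3, "Enabling classroom space"),
    (3, "Enabling classroom space"),
    (3, "Working around space limits"),
    (4, "Content-driven to skill-driven goals"),
    (5, "Specific activities and tools"),
]


def tally_subthemes(responses):
    """Count how often each sub-theme was coded under each aligned outcome."""
    tallies = defaultdict(Counter)
    for item, theme in responses:
        tallies[ITEM_TO_OUTCOME[item]][theme] += 1
    return tallies


tallies = tally_subthemes(coded_responses)
for outcome, counts in tallies.items():
    for theme, n in counts.most_common():
        print(f"{outcome}: {theme} ({n})")
```

Keying the tallies by outcome rather than by item number keeps the output readable in terms of the Backward Design components, which is the level at which the results are reported.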

In contrast, the analysis of the 16 quantitative survey items employed the mean values of faculty ratings to provide objective measures of the perceived value of the active learning activities that were modeled during the workshops (Sections 9 and 10).
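The mean-rating computation for the 0-10 scaled items can be sketched as follows. This is a minimal Python example under stated assumptions: the rating values are invented, the second item label is a placeholder, and the function name `mean_ratings` is hypothetical. Only the 0-10 scale and the teamwork item (#21) come from the survey described here.

```python
from statistics import mean

# Hypothetical 0-10 ratings for two quantitative items. The values are
# invented for illustration; they are not the study's data.
ratings = {
    "Working in teams (#21)": [9, 8, 10, 7, 9],
    "Placeholder item": [6, 7, 8, 5, 7],
}


def mean_ratings(ratings_by_item):
    """Return the mean rating per item, rejecting values outside 0-10."""
    means = {}
    for item, values in ratings_by_item.items():
        if any(not 0 <= v <= 10 for v in values):
            raise ValueError(f"Rating outside the 0-10 scale for: {item}")
        means[item] = round(mean(values), 2)
    return means


item_means = mean_ratings(ratings)
# List items from highest to lowest mean rating.
for item, value in sorted(item_means.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{item}: {value}")
```

With small cohorts such as the 15 and 11 participants here, a mean is sensitive to a single extreme rating, so reporting the number of respondents alongside each mean may be worthwhile.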

The results from the analysis of both the qualitative and quantitative survey items are presented next.

Workshops’ Effectiveness Evaluation Results: Qualitative Data on Anticipated Workshop Outcomes

The data presentation on the achievement of the anticipated workshop learning outcomes will be principally based on how faculty responded to the five survey items: #3, #4, #5, #6 and #7 that were earlier highlighted (Table 1).

Anticipated Workshop Outcomes (Situational Factors)

Faculty responses to item #3 (“Has participation in the Workshop influenced your considerations about any relevant situational factors for your course (re)design? How? Please be specific.”) reveal four identifiable sub-themes.

Theme 1: Awareness of the desirability of teaching in a classroom (physical learning space) that is designed to enable, instead of constrain, active learning or student-centered approaches. This theme is exemplified by comments such as:

The workshop has forced focus upon how limitations in my current allocated classroom space impact course delivery and the potential for incorporating active learning activities.

It inspired me to request EASL [active learning] classrooms. The number of students and their age led me to reconsider how I provide feedback so that I could do it quickly and meaningfully.

Theme 2: Awareness of the need to “work around” or “transcend” the limitations of the physical learning spaces:

Yes — the best way to answer this is you can’t fit a square peg in a round hole. Situational factors are a great parameter that allows for more tailored and creative solutions for teaching. No use in complaining about them — seek ways to transcend.

I was pretty familiar with situational factors to begin with, but if I’m not able to teach in an EASL classroom, I know their importance and got ideas for “work-arounds.”

Theme 3: Acquisition of skills and know-how to accommodate course goals in alignment with broader student learning requirements at the departmental, college or university level:

I will incorporate the university, college, and departmental student learning outcomes into my courses (never knew there were any). I will also discuss these outcomes with my students at the beginning of the course. I am pushing to improve the classrooms in our department to allow more student active learning.

I have thought more about how to assess student knowledge/comfort level going into my course. I also have a renewed interest in encouraging my colleagues to talk about our larger curriculum goals, so I can better ground my course goals within that context.

Theme 4: Provision of scaffolding and support for student-centered learning:

I will teach the course in the fall and spring. While the goals are the same, the spring group will need more scaffolding and support because they will have had fewer classes and experiences.

Yes: – the classroom layout will impact activities, interaction with the students and PPT design for lectures. – awareness of the student background on the specific topic and other skills (ex: literature review, reading research articles, oral presentations) will help taylor activities, assignments and expectations.

The workshop has given me the space and time to determine activities that could benefit all of the types of learners in my courses and turn these activities into a culminating project that can benefit a wide audience.

Anticipated Workshop Outcomes (Student Learning Goals/Outcomes)

The following two sub-themes are representative of faculty responses to item #4 (“Has participation in the Workshop influenced the (re)design of learning goals, i.e., student learning outcomes for your course? How? Please be specific.”):

Theme 1: A shift from designing content-driven student learning outcomes to designing instead pedagogically aligned learning outcomes that are student-centered and skill-focused:

Absolutely. A focus on application and what students need five years out has completely changed learning goals from content centered to skill driven.

Yes. I have spent more time thinking about goals in terms of their long-term intellectual development, rather than as solely content driven.

Definitely. Outcomes will focus more on the learning process and the capabilities building, and less on this or that technology. I will try more to educate, rather than train students.

Theme 2: Reinforcement of student-centered learning outcomes through more fine-grained articulation and concrete definition of the relevant learning goals (e.g., connecting of the dots):

It has helped me to define them. However, the outcomes where always somewhere in my head. :-))

Yes (as explained earlier). With the current class, I’ve learned about the need to connect-the-dots for students so they grasp how content is informed by assessment.

I have been able to better articulate the goals of how/ why iPads will serve the learning outcomes in my course. Having the chance to use my iPad as a learner in this course has helped me understand real assists and issues with the tool.

Anticipated Workshop Outcomes (Learning Activities)

The following three sub-themes from faculty responses to item #5 (“Has participation in the Workshop influenced the (re)design of learning activities for your course? How? Please be specific.”) are representative of how the workshops benefited the faculty participants with respect to designing and implementing effective learning activities in their courses:

Theme 1: Provision of specific learning activities, ideas, tools and resources:

Yes. – during the course I was able to write the first version of all my major assignments. Learning activities are incorporated in the assignments themselves, and in class activities preparing the students to work on the assignments. – during the course I was exposed to a wide range of activities (used in the course itself and microteaching of colleagues). Some can be immediately incorporated (the exploration of images to trigger students interest and engagement, quick group projects to break from the lecture and keep interest, self-reflection, peer-feedback prior to instructor feedback, … ) – learning activities that may not be immediately applied in the next semester may become possible in a different classroom environment (carroussel) or course in the future.

Yes! Carousel discussions, elegant text free slides, using art to generate a discussion, partnering to draw a robot– I got a lot of inspiration from working with my colleagues and especially through the micro teaching presentations.

I think I have a better handle on how to introduce large scale projects/activities, scaffold them, and encourage students how to self assess their work. I also have figured out how to improve on and better incorporate simple active learning activities I was already using.

Theme 2: Acquisition of skill in integrating learning activities with the anticipated student learning outcomes:

I now make sure that my activities contribute to the learning goals (do writing goals to improve student writing, do presentation to improve presentation skills, etc.)

Better aligned activities with goals — understand how these relate. And also see how to tailor activities to specific class content.

Theme 3: Confidence or motivation to implement (new) effective classroom learning activities:

Mostly, the workshop has given me a great deal of confidence in the way that I think as an educator. As a new faculty member and program coordinator, it can be daunting to design all of my courses from scratch. I have doubted myself some, but being in this workshop has given me a chance to express my ideas and confirm that I am going in the right direction. Thank you!

Before taking this seminar, I already had plans to drastically change my learning activities, This course reinforced my resolve to try the new activities.

Anticipated Workshop Outcomes (Educative Assessment and Feedback)

The following three sub-themes from faculty responses to item #6 (“Has participation in the Workshop influenced the (re)design of educative assessment and feedback for your course? How? Please be specific.”) are representative of how the workshops benefited the faculty participants with respect to designing and implementing educative assessment and feedback for their courses:

Theme 1: Provision of specific educative assessment examples, guidance on creating assessment rubrics, tools and resources:

I am planning to redesign my rubrics (another really helpful activity we did in the workshop) to grade written work on a large scale much more quickly without sacrificing the quality and substance.

Yes. – it gave me ideas on how to gather feedback at different stages of my course, such as surveys and discussions.

Closing the feedback loop by requiring the double journal: summarize my feedback and make a plan to fix it. Revolutionary idea!

Theme 2: The alignment of educative assessment and feedback with student learning outcomes and learning activities:

Yes. The altered learning goals have forced the assessment to change as well.

This is the first time I have truly thought of these aspects at the same time as I was designing the course itself. In the past I have really only considered student assessment and feedback in terms of how much grading I want to do in one week, how much feedback will keep the students happy, and what are the impacts of the semester calendar. It isn’t that I didn’t want to consider the actual learning/feedback process, it was more that I got bogged down in the logistics.

Yes. I think because I have a clearer idea of what I want the students to ultimately accomplish, I will be able to provide better feedback.

Theme 3: Guiding principles for providing fair but rigorous and actionable feedback:

Yes…. Actionable, Specific and Kind — ASK — really, really appreciate this as it will help provide students (and myself) prompts for peer assessment and assignment assessment.

I have to admit that my feedback was seldom actionable and I was grading students (e.g., 85%) because I had the feeling it was 85% rather than having a real “proof” for the 85%. I now understood how to develop and use rubrics to help me assess students but also give them “actionable” feedback that can help them to improve to get the missing 15%!

Anticipated Workshop Outcomes (Integration: Course Design Process Completion Checklist)

Finally, the main themes from faculty responses to item #7 (“Has participation in the Workshop influenced the integration of the different components (e.g., learning goals, activities, assessment) of your course (re)design? How? Please be specific.”) indicate how the workshops helped faculty participants check whether the different components of the course design process are holistically integrated:

Theme 1: Acquisition of skill in “conceptualizing” course design for active learning as a “holistic” process with different components that should result in an “integrated whole” course:

Yes, absolutely. Before this workshop, my ideas for the course felt active yet disparate. This workshop has increased my skills in conceptualizing an integrated whole.

Yes — the seminar takes a very holistic approach and that has helped provide a checklist of sorts to ensure that I’m hitting on most if not all components which makes a richer experience for me and for students.

I think the workshop has definitely influenced the integration of the different components. It’s funny how I know this, but I haven’t always been as deliberate in thinking about the integration. This is especially true sometimes the more one teaches a course. It is updated and changed a bit. Some things are kept that maybe are no longer as relevant. I’ve gotten rid of some things that I thought were good/important but now don’t make as much sense or with limited time not the best to include.

Yes. I now realize that this is something that can’t simply be assumed is happening.

Yes. I now pay greater attention to the goals and how activities and assessment are all tied together.

Theme 2: One faculty participant also specifically highlighted how the use of a visual syllabus (concept map) was very helpful in integrating his/her role and expectations for students:

The visual syllabus assignment was by far the most profound experience for me in terms of integrating my role and my expectations for my students throughout the course. Definitely a breakthrough moment and activity that I am going to use in my future course design as well as in my classes!

Workshops’ Effectiveness Evaluation Results: Qualitative Data on Post-Workshop Course Implementation Evaluation Plans

The main focus of the workshops was to provide faculty with the tools, resources, and collegial interactions that they needed to build new courses or redesign existing ones. However, one of the workshop participation requirements was that faculty would develop relevant post-workshop course implementation evaluation measures. Survey item #17 (“Do you have any plans to evaluate the effectiveness or success of your course (re)design? Please be specific.”) was therefore designed both to prompt faculty to make such plans and to provide insight into how they intended to evaluate the success of their re-designed courses. The data presented in this section are based on faculty responses to survey item #17.

The results show that, in general, faculty post-course implementation plans include student evaluations of course impact on their learning, peer observation, and the adoption of the small group instructional feedback service (i.e., a consultant from the Center for Teaching and Learning at Auburn University visits a class to independently solicit student comments about a course, and subsequently meets with the instructor to discuss the results). Some faculty also had plans to conduct pre- and post-assessments of the impact of the newly designed course implementations on their students’ learning outcomes, as exemplified by the following comments:

Student feedback built into reflection discussion and papers:

I am going to invite every colleague I can to observe my class. I will have the GTAs I mentor observe as well. I will have a SGIF. I am also designing pre class surveys and post class surveys to gather data on how students experienced the class.

Yes, I believe I would like to try a pre/post. My classes are not very large, though. I might be able to access students from my first two years of teaching this course to compare to this next group.

I do not have a lot of pre-re-design data as this is my first year at Auburn and the last and only one time I taught this before I designed the course on a week to week basis. However, I plan to evaluate the learning effectiveness with pre- and post tests / interviews.

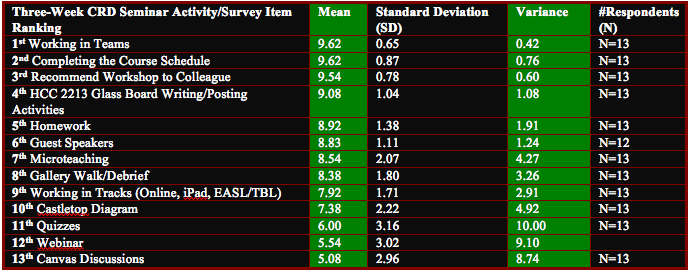

Workshops’ Effectiveness Evaluation Results: Quantitative Ranking of Active Learning Activities

This section presents the rankings of the perceived value of the active learning activities that were modeled during the two workshops. The ranking by the 3-Week CRD workshop participants (i.e., faculty) of the active learning activities that were implemented during the workshop is presented in Table 2.

Table 2: Ranking of the active learning activities implemented during the CRD 3-Week Seminar by faculty participants

The results show that the most valued active learning activities (mean ≥ 9) were Working in Teams; Completing the Course Schedule (a completed course schedule essentially indicates that an instructor is prepared to implement a new or re-designed course); and the Glass Board Writing/Posting activities in the technology-rich active learning classroom, i.e., HCC 2213.

Workshops’ Effectiveness Evaluation Results: Effect of Learning Environment

As indicated earlier, the 1-Week CRD took place after the 3-Week CRD. Apart from the duration, the two workshops were roughly equivalent in terms of design (backward design model), content (e.g., similar active learning approaches), and implementation (e.g., use of Canvas and extra-workshop activities and assignments, plus post-workshop requirements and expectations). Further, two lecturers who attended the 3-Week CRD also participated in the 1-Week CRD; otherwise, the participating faculty for the two workshops were different. It should be noted that the two returning faculty did not receive any monetary incentive for participating in the 1-Week CRD.

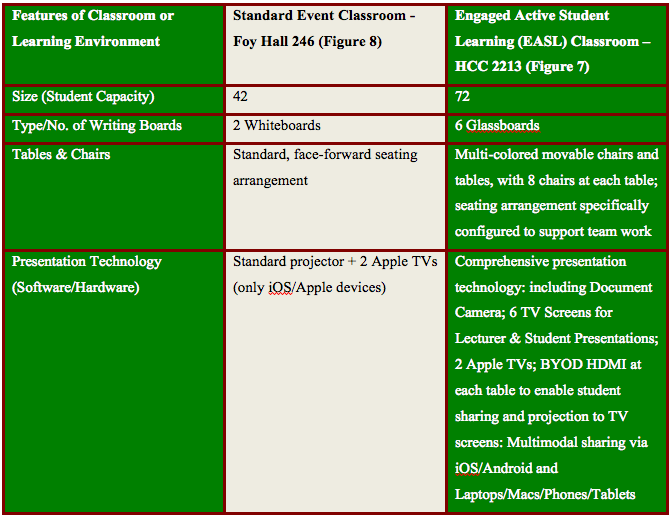

One main difference between the two workshops is the learning environment (Table 3): the HCC 2213 Engaged Active Student Learning (EASL) classroom was used for the 3-Week CRD, while the 1-Week CRD was held at the marginally active learning classroom, Foy 246.

This section therefore addresses whether the difference in learning environments (i.e., classrooms or physical learning spaces) between the two workshops had any impact on the perceived value of the active learning activities that were modeled as part of the workshops. This evaluation is informed by the recommendation from Whiteside, Brooks and Walker (2010) that “further research is needed to fully understand the relationships among different types of learning spaces and the complexities of teaching and learning [because] learning environments affect which teaching-learning activities occur in a class” (p. 15).

A comparison of the mean ratings of the active learning activities shows that the highest-ranked activities (mean ≥ 8) are similar across both workshops. For example, Working in Teams and Completing the Course Schedule are ranked very highly in both workshops, suggesting that these outcomes are robust across the two learning environments. Hence, the learning environment does not appear to have influenced the perceived value of the active learning activities. The fact that the results are comparable across both sets of faculty also suggests that the lack of a monetary grant (incentive) does not appear to have adversely influenced the outcomes observed for the participating faculty of the 1-Week CRD.

However, it is essential to clarify that the seating arrangement in the Foy Hall 246 standard event classroom was re-configured during the 1-Week CRD to mirror the round-tables-and-chairs setting in the HCC 2213 EASL classroom. This was done because the seating configuration of a learning space is a critical component of the corresponding teaching and learning outcomes. For example, a three-year empirical study on learning environments (Whiteside, Brooks & Walker, 2010) concluded that “both students and instructors reacted well to the technological and physical features of the ALCs, with the rooms’ round tables singled out for special praise” (p. 1).
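The mean-based comparison used in this section can be sketched in a few lines of Python. This is only an illustrative sketch, not the analysis procedure actually used in the study: the rating values, the assumed 0-10 scale, the placeholder "Example Activity C", and the function names are all hypothetical. Only the two named activities and the threshold of 8 come from the text.

```python
from statistics import mean

# HYPOTHETICAL ratings on an assumed 0-10 scale; these are NOT the study's data.
# "Example Activity C" is a made-up placeholder activity.
ratings = {
    "3-Week CRD": {
        "Working in Teams": [10, 9, 9, 10],
        "Completing the Course Schedule": [9, 10, 9, 9],
        "Example Activity C": [6, 7, 5, 6],
    },
    "1-Week CRD": {
        "Working in Teams": [9, 8, 9, 9],
        "Completing the Course Schedule": [8, 9, 9, 8],
        "Example Activity C": [7, 6, 6, 7],
    },
}


def mean_ratings(workshop_ratings):
    """Return the mean rating for each activity in one workshop."""
    return {activity: mean(scores) for activity, scores in workshop_ratings.items()}


def top_activities(workshop_ratings, threshold):
    """Return the set of activities whose mean rating meets the threshold."""
    means = mean_ratings(workshop_ratings)
    return {activity for activity, value in means.items() if value >= threshold}


def shared_top_activities(all_ratings, threshold=8):
    """Return the activities that meet the threshold in every workshop."""
    per_workshop = [top_activities(r, threshold) for r in all_ratings.values()]
    return set.intersection(*per_workshop)


if __name__ == "__main__":
    for workshop, workshop_ratings in ratings.items():
        print(workshop, mean_ratings(workshop_ratings))
    print("Highly rated in both:", sorted(shared_top_activities(ratings)))
```

With real survey data, an overlap between the two sets of highly rated activities would correspond to the pattern described above. A comparison of means alone does not test whether differences between the two groups are statistically significant.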

Discussion and Conclusion

This article has focused on the design and implementation of two workshops on course design for active learning to support faculty development at a research university. The design and implementation of the blended learning workshops were predicated on addressing important research gaps in the academic development literature, specifically (I) the need for professional development programs on technology integration; and (II) the provision of sufficient details about faculty development programs to facilitate replication and transfer. This is why thick descriptions of the design and implementation of the workshops were presented in Sections 3-6.

Further, the article has addressed other important research gaps in the faculty development literature, namely (III) the adoption of systematic evaluation protocols to measure the impact of professional development programs, including (IV) the need to address the effect of differential learning environments (i.e., classrooms or physical learning spaces) on learning outcomes.

To address the need for systematic evaluation in academic development, the article employed four short- and long-term workshop goals to illuminate the impact of the workshops on the professional development of the participating faculty. These workshop goals included:

- Evaluation of the extent to which the workshops enabled the participating faculty to design a new course or re-design an existing course using the backward design model. The achievement of this goal was evaluated through the qualitative sections of the workshop evaluation survey, as described in Section 7. In general, the results from the thematic analysis of the qualitative data indicated that the participating faculty were comprehensively enabled to design their courses using the backward design model.

- Evaluation of the participating faculty's plans for evaluating the success of their courses after actual implementation with their students. The analysis of the relevant survey segment (Section 8) shows that faculty plan to evaluate the impact of the re-designed courses on student outcomes through measures such as student evaluations, peer observations, and pre/post student performance on relevant assessments.

- Measurement of the perceived value of the active learning activities used during the workshops through corresponding quantitative ratings by faculty. This quantitative measurement (Section 9) highlighted that the most valued active learning workshop activities were Working in Teams and Completing the Course Schedule for the newly re-designed courses.

- Measurement of the effect of differential learning/teaching environments on learning outcomes through a quantitative comparison of the faculty ratings for the active learning activities that were modeled during the two course design workshops. This quantitative comparison (Section 10) clearly indicated that outcomes were comparable across the two learning environments (Figures 7 & 8) used in this study.

Future Research: The study is designed to be longitudinal, so as to track the long-term effects of the course design workshops for faculty development on student learning outcomes at Auburn University. Hence, future studies will include data that account for the possible effects of differential learning environments, and of course implementation fidelity to the backward design model, on the respective student learning outcomes. Future studies will also report on the dissemination activities of the participating faculty regarding their course implementation progress.

Acknowledgment: The two workshops described in this study were designed and led by Dr. Diane Boyd. The author of this study and Ms. Betsy Gilbertson also served as workshop facilitators.

References

- Amundsen, C., & Wilson, M. (2012): Are we asking the right questions? A conceptual review of the educational development literature in higher education. Review of Educational Research, 82(1), 90-126.

- Anderson, W.A., Banerjee, U., Drennan, C.L., Elgin, S.C.R., Epstein, I.R., Handelsman, J., Hatfull, G.F., Losick, R., O’Dowd, D.K., Olivera, B.M., Strobel, S.A., Walker, G.C., & Warner, I.M. (2011): Changing the culture of science education at research universities. Science, 331, 152-153.

- Bamber, V. (2008): Evaluating lecturer development programmes: Received wisdom or self-knowledge? International Journal for Academic Development, 13(2), 107-116.

- Ciaccia, L., Tsang, T., & Handelsman, J. (2011): Summary of key papers on efficacy of active learning. Center for Scientific Teaching: New Haven, Connecticut.

- Curwood, J.S. (2014): English teachers’ cultural models about technology: A microethnographic perspective on professional development. Journal of Literacy Research, 46(1), 9-38.

- Drake, E., Battaglia, D., Kaya, T., & Sirbescu, M. (2014): Teaching and learning in active classrooms: Recommendations, research and resources (pp. 1-16). Retrieved from https://www.cmich.edu/colleges/cst/CEEIRSS/Documents/Teaching and Learning in Active Learning Classrooms – FaCIT CMU Research, Recommendations, and Resources.pdf.

- Eagan, M.K., Stolzenberg, E.B., Lozano, B.J., Aragon, M.C., Suchard, M.R., & Hurtado, S. (2014): Undergraduate teaching faculty: The 2013-2014 HERI faculty survey. Los Angeles, CA: Higher Education Research Institute, UCLA.

- Epstein, M.L., Lazarus, A.D., Calvano, T.B., Matthews, K.A., Hendel, R.A., Epstein, B.B., & Brosvic, G.M. (2002): Immediate feedback assessment technique promotes learning and corrects inaccurate first responses. The Psychological Record, 52, 187-201.

- Fink, L.D. (2005): Self-directed guide to designing courses for significant learning. Retrieved from http://www.deefinkandassociates.com/GuidetoCourseDesignAug05.pdf.

- Fink, L.D. (2003): Creating significant learning experiences. San Francisco: Jossey-Bass.

- Fishman, B., Konstantopoulos, S., Kubitskey, B.W., Vath, R., Park, G., Johnson, H., & Edelson, D.C. (2013): Comparing the impact of online and face-to-face professional development in the context of curriculum implementation. Journal of Teacher Education, 64(5), 426-438.

- Freeman, S., Eddy, S.L., McDonough, M., Smith, M.K., Okoroafor, N., Jordt, H., & Wenderoth, M.P. (2014): Active learning increases student performance in science, engineering, and mathematics. PNAS, 111(23), 8410-8415.

- Froyd, J.E. (2007): Evidence for the efficacy of student-active learning strategies. Project Kaleidoscope: Washington, DC.

- Jaschik, S., & Lederman, D. (2014): The 2014 Inside Higher Ed survey of faculty attitudes on technology. Washington, DC: Inside Higher Ed.

- Kane, R., Sandretto, S., & Heath, C. (2002): Telling half the story: A critical review of research on the teaching beliefs and practices of university academics. Review of Educational Research, 72, 177-228.

- Lawless, M.J., & Tweeddale, J. (2015): Active learning: Implementation strategies for high impact. Pearson: New York.

- McGill University. (2015): Teaching and learning experiences in active learning classrooms at McGill: Highlights. Retrieved from http://www.mcgill.ca/tls/spaces/alc.

- Means, B., Toyama, Y., Murphy, R., Bakia, M., & Jones, K. (2010): Evaluation of evidence-based practices in online learning: A meta-analysis and review of online learning studies. Washington, DC: U.S. Department of Education, Office of Planning, Evaluation, and Policy Development.

- Palsole, S.V., & Brunk-Chavez, B.L. (2012): The digital academy: Preparing future faculty for digital course development. To Improve the Academy, 30, 17-30.

- Prince, M. (2004): Does active learning work? A review of the research. Journal of Engineering Education, 93(3), 223-231.

- U.S. Department of Education. (2010): Transforming American education: Learning powered by technology. Washington, DC: Author.

- Valenti, M. (2015): Beyond active learning: Transformation of the learning space. Educause Review, 31-38. Retrieved from http://net.educause.edu/ir/library/pdf/erm1542.pdf.

- Weltman, D., & Whiteside, M. (2010): Comparing the effectiveness of traditional and active learning methods in Business Statistics: Convergence to the mean. Journal of Statistics Education, 18(1), 1-13.

- Whiteside, A.L., Brooks, D.C., & Walker, J.D. (2010): Making the case for space: Three years of empirical research on learning environments. Educause Quarterly, 33(3).

- Whiteside, A., & Fitzgerald, S. (2008): Designing spaces for active learning. Implications, 7(1). Retrieved from http://www.informedesign.org/_news/jan_v07r-pr.2.pdf.

- Boyd, D. (2015): Faculty course design seminar proposal. Biggio Center for the Enhancement of Teaching and Learning, Auburn University, Auburn, Alabama. Microsoft Word File.

About the Author

Dr. Samuel Olugbenga King: Postdoctoral Research Fellow at the Biggio Center for the Enhancement of Teaching and Learning, Auburn University, Auburn, Alabama, USA. Contact: sok0002@auburn.edu.